2024 in Vision [LS Live @ NeurIPS]

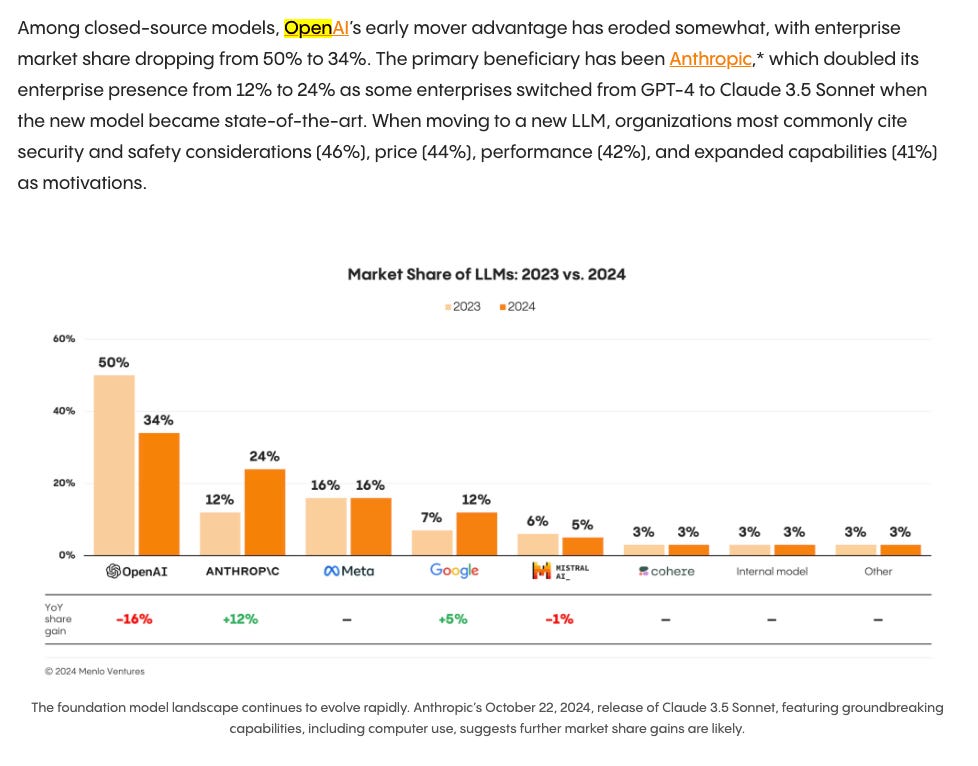

Description

Happy holidays! We’ll be sharing snippets from Latent Space LIVE! through the break, bringing you the best of 2024! We want to express our deepest appreciation to event sponsors AWS, Daylight Computer, Thoth.ai, StrongCompute, Notable Capital, and most of all, all our LS supporters who helped fund the gorgeous venue and A/V production!

For NeurIPS last year we did our standard conference podcast coverage, interviewing the authors of selected papers (which we have now also done for ICLR and ICML). However, we felt that we could be doing more to help AI Engineers 1) get more industry-relevant content, and 2) recap 2024 with a year in review from experts. As a result, we organized the first Latent Space LIVE!, our first in-person miniconference, at NeurIPS 2024 in Vancouver.

The single most requested domain was computer vision, and we could think of no one better to help us recap 2024 than our friends at Roboflow, who were one of our earliest guests in 2023 and had one of our top episodes again in 2024. Roboflow has since raised a $40m Series B!

Links

Their slides are here:

All the trends and papers they picked:

* Sora (see our Video Diffusion pod) - extending diffusion from images to video

* SAM 2: Segment Anything in Images and Videos (see our SAM2 pod) - extending prompted masks to full video object segmentation

* DETR Dominancy: DETRs show Pareto improvement over YOLOs

* RT-DETR: DETRs Beat YOLOs on Real-time Object Detection

* LW-DETR: A Transformer Replacement to YOLO for Real-Time Detection

* D-FINE: Redefine Regression Task in DETRs as Fine-grained Distribution Refinement

* MMVP (Eyes Wide Shut? Exploring the Visual Shortcomings of Multimodal LLMs)

* Florence 2 (Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks)

* PaliGemma / PaliGemma 2

* PaliGemma: A versatile 3B VLM for transfer

* PaliGemma 2: A Family of Versatile VLMs for Transfer

* AIMv2 (Multimodal Autoregressive Pre-training of Large Vision Encoders)

* Vik Korrapati - Moondream

Full Talk on YouTube

Want more content like this? Like and subscribe to stay updated on our latest talks, interviews, and podcasts.

Transcript/Timestamps

[00:00:00 ] Intro

[00:00:05 ] AI Charlie: Welcome to Latent Space Live, our first mini conference held at NeurIPS 2024 in Vancouver. This is Charlie, your AI co-host. When we were thinking of ways to add value to our academic conference coverage, we realized that there was a lack of good talks just recapping the best of 2024, going domain by domain.

[00:00:36 ] AI Charlie: We sent out a survey to the over 900 of you who told us what you wanted, and then invited the best speakers in the Latent Space Network to cover each field. 200 of you joined us in person throughout the day, with over 2,200 watching live online. Our second featured keynote is The Best of Vision 2024, with Peter Robichaud and Isaac [00:01:00 ] Robinson of Roboflow, with a special appearance from Vik Korrapati of Moondream.

[00:01:05 ] AI Charlie: When we did a poll of our attendees, the highest interest domain of the year was vision. And so our first port of call was our friends at Roboflow. Joseph Nelson helped us kickstart our vision coverage in episode 7 last year, and this year came back as a guest host with Nikhila Ravi of Meta to cover Segment Anything 2.

[00:01:25 ] AI Charlie: Roboflow have consistently been the leaders in open source vision models and tooling, with their Supervision library recently eclipsing PyTorch's vision library, and Roboflow Universe hosting hundreds of thousands of open source vision datasets and models. They have since announced a $40 million Series B led by Google Ventures.

[00:01:46 ] AI Charlie: Woohoo.

[00:01:48 ] Isaac's picks

[00:01:48 ] Isaac Robinson: Hi, we're Isaac and Peter from Roboflow, and we're going to talk about the best papers of 2024 in computer vision. So, for us, we defined best as what made [00:02:00 ] the biggest shifts in the space. And to determine that, we looked at what are some major trends that happened and what papers most contributed to those trends.

[00:02:09 ] Isaac Robinson: So I'm going to talk about a couple of trends, Peter's going to talk about a trend, and then we're going to hand it off to Moondream. So, the trends that I'm interested in talking about are a major transition from models that run on a per-image basis to models that run using the same basic ideas on video.

[00:02:28 ] Isaac Robinson: And then also how DETRs are starting to take over the real-time object detection scene from the YOLOs, which have been dominant for years.

[00:02:37 ] Sora, OpenSora and Video Vision vs Generation

[00:02:37 ] Isaac Robinson: So as a highlight we're going to talk about Sora, which from my perspective is the biggest paper of 2024, even though it came out in February. Is the what?

[00:02:48 ] Isaac Robinson: Yeah. Yeah. So Sora is just a post. So I'm going to fill it in with details from replication efforts, including Open-Sora, and related work such as Stable [00:03:00 ] Video Diffusion. And then we're also going to talk about SAM 2, which applies the SAM strategy to video. And then the improvements in 2024 to DETRs that are making them a Pareto improvement over YOLO-based models.

[00:03:15 ] Isaac Robinson: So to start this off, we're going to talk about the state of the art of video generation at the end of 2023: MAGVIT. MAGVIT is a discrete-token video tokenizer akin to VQGAN, but applied to video sequences. And it actually outperforms state-of-the-art handcrafted video compression frameworks in terms of the bit rate versus human preference for quality.

[00:03:38 ] Isaac Robinson: And videos generated by autoregressing on these discrete tokens look pretty nice, but only up to about five seconds in length and, you know, not super detailed. And then suddenly a few months later we have this, which when I saw it, it was totally mind blowing to me.

[00:03:59 ] Isaac Robinson: 1080p, [00:04:00 ] a whole minute long. We've got light reflecting in puddles. That's reflective. Reminds me of those RTX demonstrations for next generation video games, such as Cyberpunk, but with better graphics. You can see some issues in the background if you look closely, but they're kind of, as with a lot of these models, the issues tend to be things that people aren't going to pay attention to unless they're looking for.

[00:04:24 ] Isaac Robinson: In the same way that, like, six fingers on a hand is a giveaway you're not going to notice unless you're looking for it. So yeah, as we said, Sora does not have a paper. So we're going to be filling it in with context from the rest of the computer vision scene attempting to replicate these efforts. So the first step: you have an LLM caption a huge amount of videos.

[00:04:48 ] Isaac Robinson: This is a trick that they introduced in DALL-E 3, where they train an image captioning model to just generate very high qualit
